
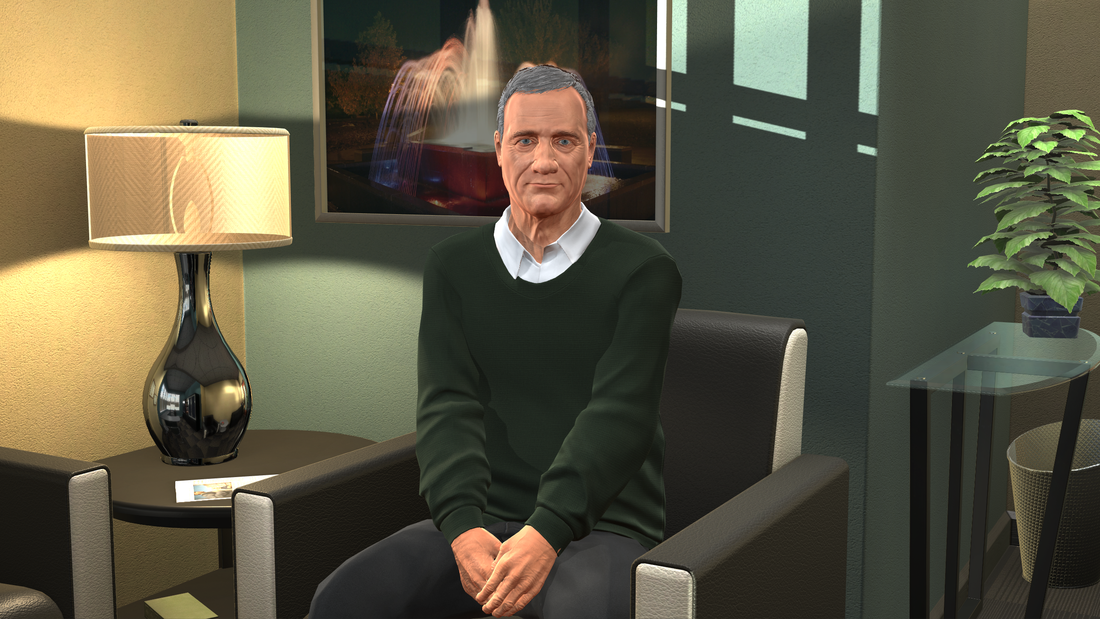

In the last few years, Virtual Reality (VR) and Artificial Intelligence (AI) have attracted huge interest across many industries. Both technologies have such broad application areas that it is difficult to find an industry that would not benefit from one or both of them, with uses ranging from entertainment to analysis, training, assessment, and everything in between. But what if we combined the power of these two technologies, augmented it with human reasoning, and built applications for entertainment, analysis, training, assessment, and more? Doing so opens a new door, one that requires insight into human psychology and into how people behave when interacting with these technologies. More importantly, it becomes critical to design how these technologies present themselves when they interact with people.

I'll try to explain this concept within the boundaries of what we currently do at Mursion, a VR and AI based training platform designed to help people acquire skills through experiential learning. Mursion was built on the foundational principle that difficult real-life conversations can be simulated before they occur, to safely inoculate learners against unpredictable behavioral changes under stress. Many of us have been on the receiving end of such conversations more than once in our lives, whether at work, at home, or in a new place where socio-cultural practices differ from our own perception of the norm. While experiencing Mursion, learners' belief that the simulation is real and is happening in their physical space is continuously reinforced. This concept was well studied and published by Mel Slater (described further in one of my previous blogs). We achieve it by carefully stimulating the learner's visual and auditory senses using Virtual Reality and Artificial Intelligence.
Virtual Reality is first used to create an authentic setting in which the learner is positioned. Avatars (digital representations of humans), driven by a combination of Artificial Intelligence and Human Reasoning, present the learning challenge. Multi-exemplar training, 360-degree feedback, and reflection serve as powerful learning mechanisms that help learners prepare for difficult situations they may encounter on the job. To do this, we follow several key principles that we have learned by analyzing data (no personally identifiable data is ever collected) and by seeking feedback from hundreds of learners who have used the platform:

(i) We design simulation scenarios as short vignettes, 7 to 10 minutes long at most. The content of each scenario is both relevant to the learner and high in impact. Virtual Reality lets us position the learner in the situation by modifying the visuals to match the context in which the vignette occurs. For example, a conflict with a co-worker should take place in the learner's own workplace, not in a generic setting. We go to great lengths to customize the simulation content, modeling each environment from reference photographs relevant to the vignette being designed.

(ii) We use a combination of Human Reasoning and Artificial Intelligence to create avatars that blend seamlessly into the simulation setting. While the mechanics of this blending are proprietary, spoken dialogue on the Mursion platform is not driven by AI (I've explained the reasons in a previous blog). In fact, we have learned from data that language, accents, and even vocabulary play a huge role in reinforcing the authenticity of these highly engaging simulations. The more authentic the simulation, the better the learning outcomes.

(iii) All scenarios have discretely measurable objectives or goals for the learner. We leverage these to implement a 360-degree feedback mechanism and rely on reflection as a powerful learning mechanism. Learners receive a video of their performance in the simulation, which they can share with peers, coaches, and others to seek feedback, while also watching themselves and taking notes that might help them improve in subsequent sessions. We are now testing a system that uses Artificial Intelligence to analyze learners' audio and video streams and present them with highlights of their interaction: the micro-interactions in which they had a positive or negative impact on their avatar counterpart during the simulation. While we are still studying and refining our approach to this analysis, there is enough positive evidence to suggest that this data could also support workforce analytics, for example to help reorganize teams for maximum productivity and efficiency.

(iv) We design simulations so that they do not reward rule-governed behavior. Once again, experience and data have shown that there are multiple ways to achieve a given outcome in a difficult human conversation. My good friends, advisors, and mentors William Follette and Scott Compton have been at the forefront of helping us study the science behind this concept. As they would put it: “We can only tell you certain things you should not do during a conversation (e.g. do not dig your nose!); everything else that you try in order to have a positive impact on your counterpart is fair game.” Moreover, every conversation and every person is different, so it is critical that you assess your own impact on others and adjust your behavior (verbal and non-verbal) accordingly until you achieve the desired outcome.

(v) Finally, we rely on multi-exemplar training: learners are exposed to several simulations around particularly difficult situations, and each simulation may have a different learning outcome. No two simulations are ever the same (unless an assessment requires it). Learners encounter avatars representing a variety of demographics, each posing a unique learning challenge. The more simulations a learner completes, the better prepared they can be to handle stressful conversations in the real world.

While AI and VR can be combined in several other ways depending on the application, we at Mursion have found it necessary to study human psychology when using these powerful technologies. We have also learned that blending human reasoning with VR and AI can create a technology that can be used in a plethora of ways.
I will try to address some of these in a future blog. But without the science that helps us understand behavioral psychology when people interact with technology, it is difficult to truly harness the impact these technologies can provide. If you would like to learn more and see whether such technologies may be relevant or impactful for you, please drop us a note and come see us at Mursion. We're in the Bay Area and always happy to entertain visitors!


About

Arjun is an entrepreneur, technologist, and researcher working at the intersection of machine learning, robotics, human psychology, and learning sciences. His passion lies in combining technological advancements in remote operation, virtual reality, and control system theory to create high-impact products and applications.

Archives

April 2025
